Raw Material for Research

Fewer data silos, more exchange – computer science research is striving for a new data culture. The necessary infrastructure is being built up under the project management of the UDE.

By Birgit Kremer

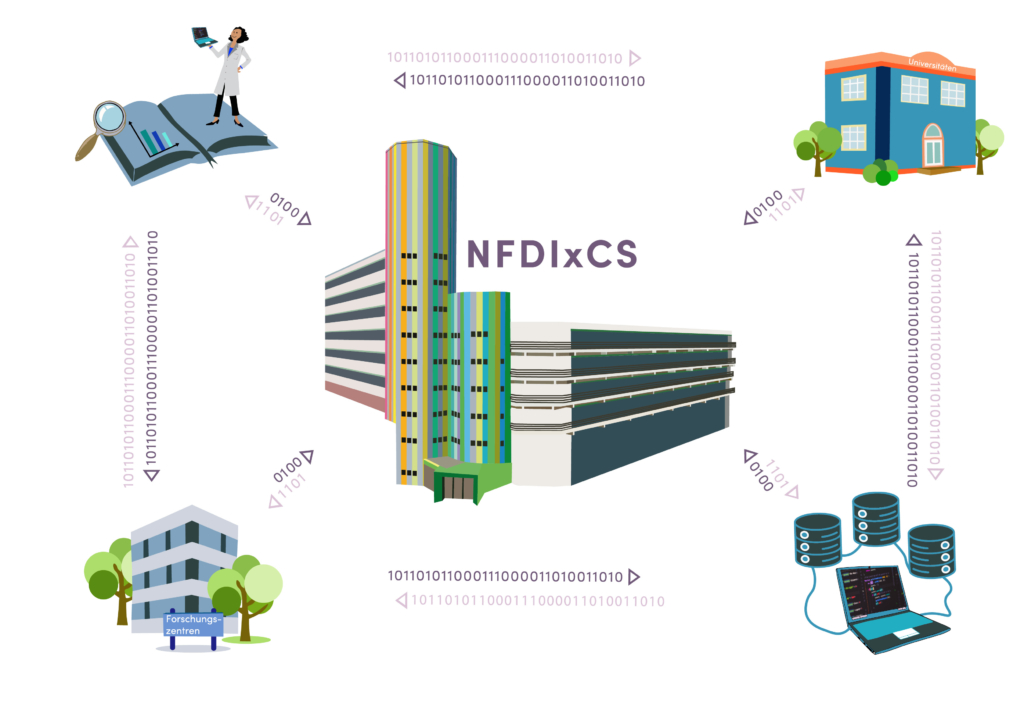

Research data is a valuable asset of scientific work and at the same time the raw material for generating new knowledge. Its production is costly and time-consuming, yet its potential is not fully exploited in Germany. This applies to computer science as well as to other research disciplines. Often, data pools are accessible only to a single project team, although they could also be useful for other researchers. ‘What we need is the ability to make available not just publications, but all the data generated in research projects,’ says Prof. Dr Michael Goedicke. The UDE professor from the software technology institute paluno leads the computer science consortium NFDIxCS, one of 27 alliances tasked with building a national research data infrastructure (NFDI – Nationale Forschungsdateninfrastruktur).

Recognizing the problem, the German government has introduced a real innovation into its scientific landscape with the NFDI: the federal and state governments are making up to 90 million euros available each year until 2028 to secure research data in the long term and make it usable. The idea was that the necessary infrastructure should not be imposed on universities and non-university institutions such as Fraunhofer and Leibniz institutes but should be developed by the research community itself. This required the formation of consortia that could speak and act on behalf of their discipline. Goedicke and his colleagues succeeded in convincing around 50 partner institutions from all areas of computer science to join the NFDIxCS.

FROM SUPERCOMPUTERS TO MEGAPROJECTS

But what exactly is genuine computing research data? Computer science data is as diverse as the research questions it addresses. Technical computing studies the performance of PCs and supercomputers. The relevant data therefore include, for example, the results of performance measurements. Human-computer interaction, on the other hand, is less interested in what is inside computers than in what happens between people and machines. It often relies on human subject studies, which sometimes produce large amounts of images and sound recordings that are sensitive in terms of data protection. In other branches of computer science, software is not only used as a tool, but is itself the main object of interest. This is the case, for example, with software engineering, which deals with the design of software-intensive application systems and the organisation of large software projects.

Given all these differences, it is necessary to establish common standards. This includes, first and foremost, standardised descriptions with metadata, which are needed to find research data. Equally important is the creation of future-proof structures. In addition to computer science institutes, the consortium also includes large computing centres. Not only do they contribute expertise and computing power, but they can also ensure that the new infrastructures remain in place after the funding ends.

A TIME CAPSULE FOR RESEARCH DATA

The question of whether and how research data will be available for use in the future also depends on the right software. Anyone who has opened a Microsoft Word document using a different version of the word processor will be familiar with the phenomenon: formatting is lost, and images appear in unexpected places in the text. This problem also occurs when processing seemingly straightforward formats such as CSV files, which store measurements without formatting or other information about the meaning of the data. As a result, when the same file is processed by different software versions, the values may be rounded differently. In general, reproducibility of research data can only be guaranteed if the exact software version used to generate the data is still available.
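The rounding effect can be illustrated with a minimal Python sketch. It is not taken from the NFDIxCS project: the two "tools" are hypothetical stand-ins, modelled here simply as two different number formats applied when the same measurements are written to a CSV file.

```python
import csv
import io


def write_csv(values, number_format):
    """Write floats to a one-column CSV, formatting each with number_format."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["measurement"])
    for value in values:
        writer.writerow([format(value, number_format)])
    return buffer.getvalue()


def read_csv(text):
    """Read the one-column CSV back into a list of floats."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header row
    return [float(row[0]) for row in reader]


# Illustrative measurements; the values themselves are made up.
measurements = [0.1 + 0.2, 1 / 3, 2.675]

# Hypothetical tool A exports two decimal places.
tool_a = read_csv(write_csv(measurements, ".2f"))
# Hypothetical tool B exports 17 significant digits, enough to
# reproduce a double-precision value exactly.
tool_b = read_csv(write_csv(measurements, ".17g"))

print(tool_a == measurements)  # False: precision was lost on export
print(tool_b == measurements)  # True: values survive the round trip
```

Nothing in the CSV file itself records which of the two formats was used, which is why the exporting software and its version matter for reproducing a result.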

The NFDIxCS consortium therefore aims to preserve not only the research data, but also everything that is needed to use a particular software version, in a kind of time capsule called the Research Data Management Container (RDMC). This includes the operating system and the libraries used. The consortium is also working on solutions for the key to the capsule, so that researchers can access it at any time, regardless of their institutional affiliation, in accordance with transparent access rights and data protection laws.
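How the RDMC records its contents is not described here. Purely as an illustration of the underlying idea, the following Python sketch collects the operating system, interpreter version and installed library versions into a manifest that could be stored next to a dataset. The function name `describe_environment` and the manifest keys are assumptions made for this example, not part of the RDMC.

```python
import json
import platform
from importlib import metadata


def describe_environment():
    """Collect the software context needed to rerun an analysis later.

    Hypothetical manifest layout; not the RDMC format.
    """
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken metadata
            packages[name] = str(dist.version)
    return {
        "operating_system": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "python_version": platform.python_version(),
        "packages": dict(sorted(packages.items())),
    }


manifest = describe_environment()
# Serialise the manifest so it can be archived alongside the data.
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json[:200])
```

A manifest like this only names the software versions. A container in the sense described above would also have to include the software itself, so that it can still be run once those versions are no longer distributed.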

A LIVING DATA CULTURE

In addition to technology, the key to the NFDI’s success is the human factor. Goedicke has one group in particular in mind: ‘Most of the work and the real impetus for research comes from early-stage researchers,’ he says. ‘So, we want to train undergraduates and postgraduates in dealing with research data.’ An awareness of sustainable data management should enable them to act in and influence their environment. After all, a new data culture must be a living culture. Otherwise, even the best technology is useless.

The aim of the NFDI is to systematically develop the data resources of science and research, to secure them for the long term and to make them accessible nationally and internationally. It is being built up by independent consortia from the scientific community. The German federal and state governments are funding the project with up to 90 million euros per year from 2019 to 2028.

Main image: © unsplash